Customer support today is archaic. A decade ago, companies like Zendesk and Intercom automated much of the support process with chatbots embedded in websites; today, however, their tools are largely limited to information retrieval. They can answer questions, but they can't actually resolve tickets.

Unlike other AI tools that mostly deflect tickets or rely on basic Q&A, Lorikeet mimics your best human agents by following the exact workflows they use. This lets it resolve complex issues with precision rather than just respond to simple queries. In turn, companies can provide high-quality, scalable support without constant hiring, maintaining or even improving customer satisfaction as they grow while freeing human agents to focus on more strategic work.

Because we follow the same standard operating procedures that top human agents use, we're uniquely able to handle complex tasks like resolving delayed orders or replacing compromised credit cards: tasks that other AI agents typically struggle with or escalate to humans.

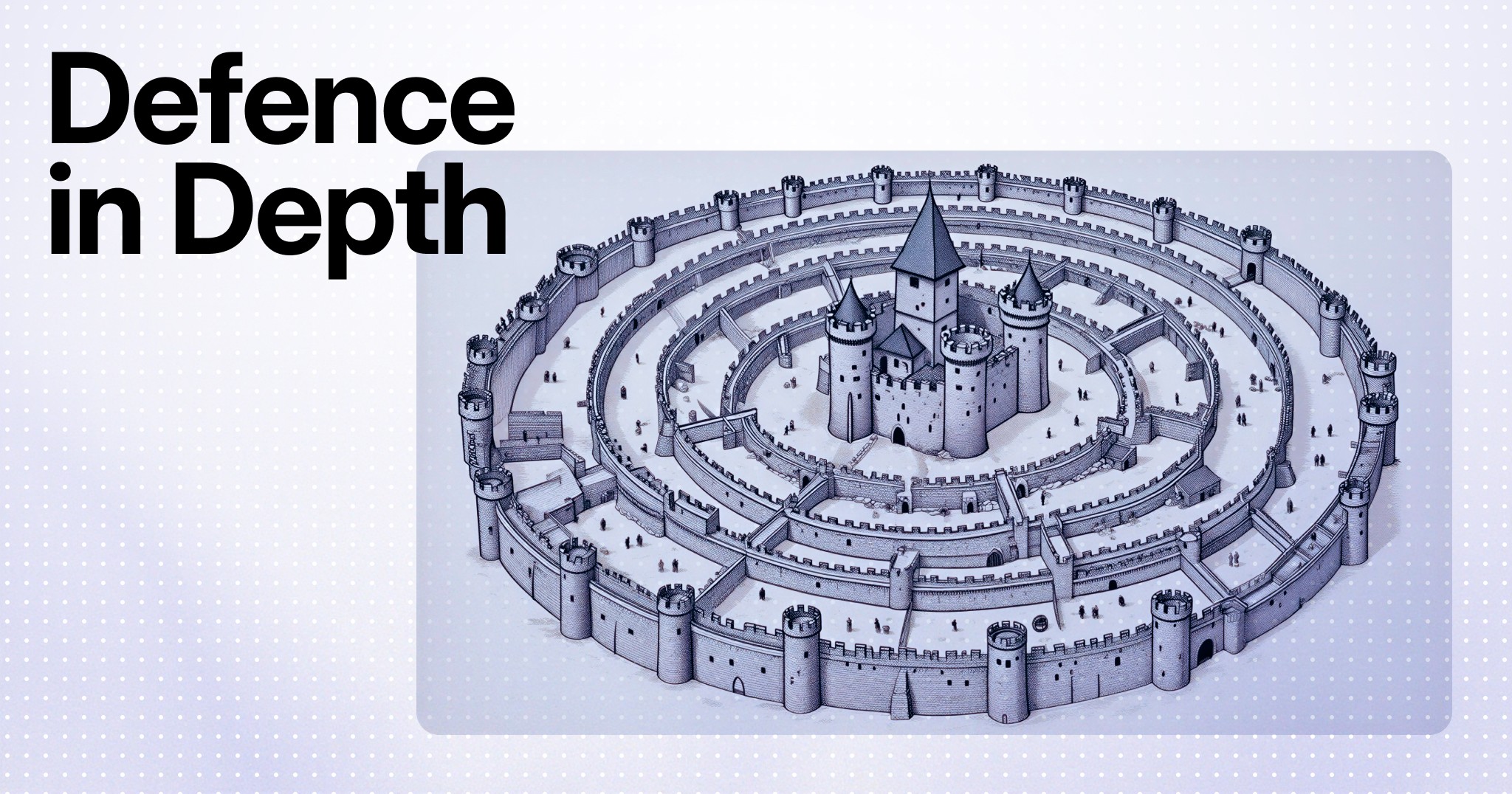

In this piece, I delve a bit deeper into the technical framework powering it all.

Customer support teams in complex or regulated spaces like fintech and healthtech run on standard operating procedures, or workflows, that tell an agent how to solve a customer's problem. In these complex spaces, FAQs or help center articles aren't sufficient to serve customers: the answer is usually conditional on the state of some entity (a transaction, a shipment) and the state of the customer (are they KYC approved? Are they on the premium tier?). To be an effective member of a support team at a company like this, an agent needs to understand and reliably execute these workflows.
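To make the point about conditional answers concrete, here is a minimal Python sketch of one such workflow written as plain deterministic code. Everything in it (`delayed_order_workflow`, the `Customer` and `Shipment` types, the day thresholds, and the action names) is hypothetical and chosen for illustration; it is not Lorikeet's implementation, just a picture of the kind of state-dependent logic an SOP encodes.

```python
from dataclasses import dataclass
from enum import Enum


class ShipmentStatus(Enum):
    IN_TRANSIT = "in_transit"
    DELAYED = "delayed"
    DELIVERED = "delivered"


@dataclass
class Customer:
    kyc_approved: bool
    premium_tier: bool


@dataclass
class Shipment:
    status: ShipmentStatus
    days_late: int


def delayed_order_workflow(customer: Customer, shipment: Shipment) -> str:
    """Hypothetical SOP for a 'where is my order?' ticket.

    The correct action depends on entity state (the shipment) and
    customer state (KYC, tier), which a static FAQ answer can't express.
    """
    if not customer.kyc_approved:
        # Illustrative policy: unverified customers must finish KYC first.
        return "ask_customer_to_complete_kyc"
    if shipment.status is ShipmentStatus.DELIVERED:
        return "share_proof_of_delivery"
    if shipment.status is ShipmentStatus.IN_TRANSIT:
        return "share_tracking_link"
    # The shipment is delayed: the remedy depends on tier and severity.
    if customer.premium_tier and shipment.days_late >= 3:
        return "offer_free_reshipment"
    if shipment.days_late >= 7:
        return "escalate_to_human"
    return "apologize_and_share_new_eta"
```

The same customer question gets a different correct answer depending on the shipment's status and the customer's KYC status and tier, which is exactly what a help-center article can't capture.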

Lorikeet's AI agent is built around understanding and executing workflows. Workflows encapsulate the business logic that human support teams use in a form an AI system can tractably handle. This makes it possible to resolve inbound support tasks the way the rest of the human team does, and in a way that leaves customers delighted.

This is different from the two other approaches we see in the market: either pure retrieval-augmented FAQ answering (“RAG”), or “agentic” models that involve the AI building its own execution path at runtime. We don’t think either system is fit for purpose for complex support: RAG can’t handle conditional and stateful logic; agentic reasoning attempts to handle it, but is stochastic when it should be deterministic (just imagine if a human team member made up a new way to solve the same type of ticket each time!).

We're proud of the system we've built, and of our Intelligent Graph architecture in particular. This post goes into more depth about how it works. If you'd like to learn more, please book a demo!

What if the AI makes things up?

First things first. Anyone who's played with a modern AI system will be aware of hallucination, where the model generates text that looks plausible and sounds confident but is in fact wrong. This is partly a side effect of the way foundation LLMs are finetuned. If you input the question "When was Ludwig Wittgenstein born?" into a base language model (that is, a system which simply estimates the probability of the next word, conditional on everything that's come before), it would be reasonable for it to put a large weight on responses like "Sorry, I have no idea" or "I don't care." But modern LLMs are meant to be helpful sources of information, so they are finetuned to skew towards interesting and potentially useful answers. Unfortunately, this means that, left to their own devices, they're prone to being confidently wrong.

For customer support, compared to the general case, hallucination is both more likely and more costly. It's more likely because foundation LLMs gravitate towards being helpful, proactive, and upbeat. If you frame the conversation so that the language model takes on the persona of a customer support agent, it will tend to offer expedited shipping, promise discounts, or claim that it's authorized a free upgrade, even if those actions are against policy or aren't even possible, because that's the kind of thing a helpful customer support agent would generally say. And it's more costly because the bot is representing you in an interaction with one of your actual customers. This has actually happened! Air Canada was famously ordered to compensate a customer after its AI chatbot invented a policy allowing bereavement fares to be claimed retroactively.

We mitigate hallucination by only ever giving the LLM a specific and focused task to do. Instead of expecting it to manage an entire conversation (as in the agentic approach), it is only ever passing on specific talking points defined at a certain point within a workflow. It sees all and only the information it needs to do that single job. General dialog systems like ChatGPT or Claude can discuss anything, so their developers need to handle questions about all human knowledge. Solving a specific and domain-focused task is much more reliable.

Incidentally, this design pattern also helps to defend against prompt injection and data leakage. We execute a workflow by composing small LLM tasks with individual pieces of procedural logic. Even when sensitive data is required to resolve a ticket, we only expose that data to an LLM (and therefore to potential leakage) when a specific step strictly requires it. If one of the LLMs is told to forget its previous instructions and compose a sea shanty, the blast radius of any unintended behavior is small, me hearties.
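A minimal sketch of that composition, assuming a hypothetical `LLM` callable (prompt in, text out) and a hypothetical compromised-card workflow: the freeze-and-reissue step is ordinary code, and the only LLM task sees a first name and the card's last four digits, never the full card number. All names and steps here are illustrative, not Lorikeet's actual API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns generated text.
LLM = Callable[[str], str]


@dataclass
class CardAccount:
    full_card_number: str  # sensitive: never placed in any prompt
    holder_name: str
    frozen: bool = False
    replacement_ordered: bool = False


def freeze_and_reissue(account: CardAccount) -> str:
    """Procedural step: plain code, no LLM. In practice these would be card-platform API calls."""
    account.frozen = True
    account.replacement_ordered = True
    return account.full_card_number[-4:]  # only the last four digits leave this step


def compose_reply(llm: LLM, first_name: str, last4: str) -> str:
    """Narrow LLM task: phrase fixed talking points, seeing only what it needs."""
    talking_points = [
        f"The card ending in {last4} has been frozen.",
        "A replacement card will be mailed.",
    ]
    prompt = (
        f"Write a short, friendly support reply to {first_name}. "
        "Convey exactly these points and nothing else:\n- "
        + "\n- ".join(talking_points)
    )
    return llm(prompt)


def compromised_card_workflow(llm: LLM, account: CardAccount) -> str:
    # Control flow lives in code; the LLM is used for exactly one phrasing task.
    last4 = freeze_and_reissue(account)
    first_name = account.holder_name.split()[0]
    return compose_reply(llm, first_name, last4)
```

Passing in a stub such as `lambda prompt: "ok"` is enough to run it, and to check that the full card number never appears in any prompt. Even if that single LLM call were hijacked, it has no access to the card number or to the freeze logic.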

So is this a phone tree? Does it require custom engineering to launch?

The previous generation of support chatbots used predefined business logic to handle every conversation. Readers of a certain age may also remember the era of 'Expert Systems', where AI agents were restricted to decision trees. Similarly, you may be aware of well-funded competitors who quote a 6-12 month implementation period in which they essentially custom-engineer your workflow logic. While our platform looks superficially similar to these deterministic expert systems, it is in fact very different, both in how the decision trees operate and in how they're used. We think the architecture that enables this is a genuinely new way for companies to use LLMs, and we call it Intelligent Graph orchestration.

On the operational level, we leverage the new capabilities of contemporary language models. Older systems could only handle decisions like “Did the customer’s message contain the word ‘booking’?” because it was too hard to parse natural language in any greater depth. This is why chatting with one of them felt like talking to a phone tree. Because modern LLMs can handle more human-like reasoning when making decisions — “Does the customer sound angry?” or “Have they mentioned any reason why they can’t be home for a delivery?” — our system handles conversations more smoothly.
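The difference between the two kinds of branch can be sketched as follows. `keyword_decision` mirrors the old string-matching style; `llm_decision` asks an LLM a single narrow yes/no question and parses the answer strictly. The `LLM` callable and the prompt wording are assumptions for illustration, not a description of Lorikeet's internals.

```python
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns generated text.
LLM = Callable[[str], str]


def keyword_decision(message: str) -> bool:
    """Old-style branch: brittle string matching on the raw message."""
    return "booking" in message.lower()


def llm_decision(llm: LLM, question: str, message: str) -> bool:
    """LLM-backed branch: one narrow yes/no judgment about the message.

    The answer is parsed strictly, so the branch either resolves to
    True/False or fails loudly; it never improvises a new path.
    """
    prompt = (
        "Answer with exactly YES or NO.\n"
        f"Question: {question}\n"
        f"Customer message: {message}"
    )
    answer = llm(prompt).strip().upper()
    if answer not in ("YES", "NO"):
        raise ValueError(f"unparseable decision: {answer!r}")
    return answer == "YES"
```

A message like "I work nights, so nobody is home to sign for the parcel" contains no useful keyword, but an LLM can reasonably answer YES to "Have they mentioned any reason why they can't be home for a delivery?". Rejecting anything other than YES or NO keeps the branch well-defined; in a real workflow, an unparseable answer might be retried or handed to a human rather than raised.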

We also use the decision trees differently. They are managed by what we call our 'orchestration layer', which keeps track of the conversation and marshals language models when needed to do specific tasks. In that sense, our system design inverts the usual pattern for AI systems, where a single LLM is in charge. We arrived at this pattern by working with customer support and ops teams to figure out how to solve their problems at scale, rather than by starting with a cool piece of tech and looking around for applications of it.
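A minimal sketch of that inversion, with every name hypothetical: the `Orchestrator` owns the control flow and walks a small graph of nodes, and an LLM is only invoked inside a node for one narrow job (a yes/no judgment, or phrasing a reply). A step limit hands the conversation to a human rather than letting a faulty graph loop forever. This illustrates the pattern described above; it is not Lorikeet's actual orchestration layer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical LLM interface: takes a prompt, returns generated text.
LLM = Callable[[str], str]
# A node's step: (conversation, llm, replies) -> next node name, or None when done.
Step = Callable[[List[str], LLM, List[str]], Optional[str]]


@dataclass
class Node:
    name: str
    run: Step


class Orchestrator:
    """Owns control flow; LLMs are only called inside individual nodes."""

    def __init__(self, nodes: List[Node], start: str, max_steps: int = 20):
        self.nodes: Dict[str, Node] = {node.name: node for node in nodes}
        self.start = start
        self.max_steps = max_steps

    def handle(self, conversation: List[str], llm: LLM) -> List[str]:
        replies: List[str] = []
        current: Optional[str] = self.start
        for _ in range(self.max_steps):
            if current is None:
                return replies
            current = self.nodes[current].run(conversation, llm, replies)
        if current is not None:
            # Safety valve: a workflow that doesn't terminate goes to a human.
            replies.append("[handoff to human]")
        return replies


# --- A tiny hypothetical "where is my order?" graph ---
def check_angry(conversation: List[str], llm: LLM, replies: List[str]) -> Optional[str]:
    verdict = llm("Answer YES or NO. Does the customer sound angry?\n" + conversation[-1])
    return "escalate" if verdict.strip().upper() == "YES" else "share_eta"


def share_eta(conversation: List[str], llm: LLM, replies: List[str]) -> Optional[str]:
    replies.append(llm("Politely tell the customer their order arrives Friday."))
    return None


def escalate(conversation: List[str], llm: LLM, replies: List[str]) -> Optional[str]:
    replies.append("[handoff to human]")
    return None


workflow = Orchestrator(
    [Node("check_angry", check_angry), Node("share_eta", share_eta), Node("escalate", escalate)],
    start="check_angry",
)
```

Note that the LLM never chooses which node runs next on its own authority: `check_angry` maps its one-word verdict onto a fixed pair of edges, and everything else is ordinary code that can be logged, tested, and audited.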

Does the AI drive everything? Should it?

One option for automating customer support is the 'agentic' approach: you give an LLM a list of policy instructions and a list of APIs it can call, and let it handle each conversation however it likes. This design strategy works great for simple demos, but we are skeptical about how well it can handle real production workloads in industries like fintech and healthtech. The crux of the issue is that, in our experience, an LLM's ability to follow policy directives decays badly after about half a dozen instructions. This makes sense when you consider how LLM training data is typically created in 2024: it is straightforward to synthetically generate an instruction-following example with a simple constraint, but difficult to assemble high-quality examples of complicated instructions at scale. As a result, agentic LLMs get distracted and make mistakes.

What about GPT-5, Gemini 2, Claude 4?

We can make an educated guess that the next generation of LLMs will be more reliable and have deeper reasoning abilities than the best of the current cohort. However, our thesis is that they should generate and supervise customer support workflows, not make them up on the fly. Keeping workflows explicit makes them possible to debug and monitor at scale, a crucial part of regulatory compliance and effective CX management. (Imagine a new member of a bank's customer service team deciding to handle each ticket by making up a plan on the spot and then executing it. Oof!)

Do we need a support copilot?

It's possible to plug in AI products that draft responses for human agents to use but don't interact with customers directly. While this looks at first glance like a productivity boost, it isn't. Either the responses are high quality, in which case the system should just send them, or they're low quality, in which case they're useless. We suspect this leads to one of two outcomes: 1) if the copilot is good, having humans just review AI responses and hit Send is even more dehumanizing and alienating than current support work, and 2) if the copilot is not good, constantly detecting AI hallucinations and errors is probably more effort for humans than just drafting the answers themselves.

Our general contention is that AI systems should entirely resolve any support tickets that they know how to deal with, and hand off the complex edge cases to humans.

Do you train on our data?

No. We do not finetune models on your data, and do not pass it to any third-party systems which might use it to train their own models. Your customer data, proprietary knowledge, and ticket histories remain your own.

Let’s go!

To learn more about how Lorikeet AI can transform your business, book a demo now.